The Anatomy of an Outcome

By Sarah M. Dunifon

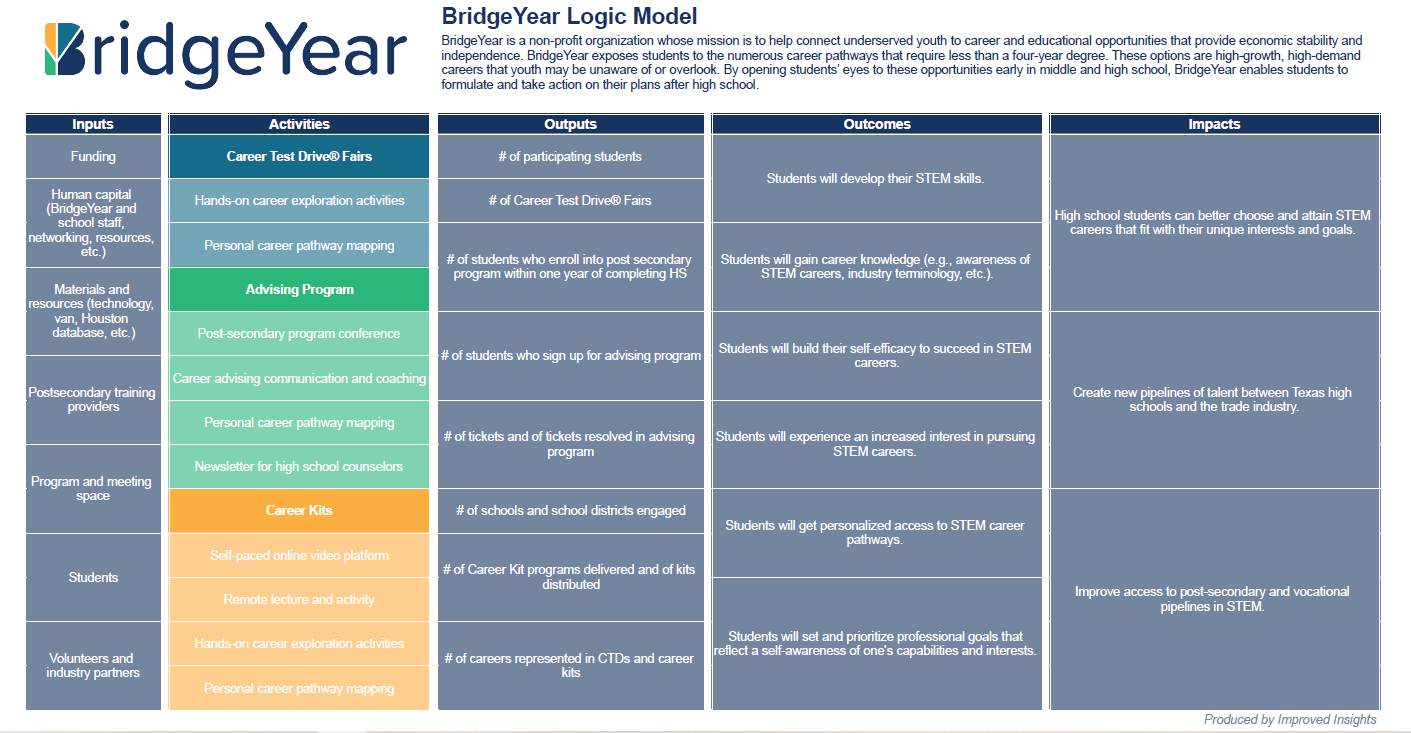

Outcomes are the intended changes that a program or intervention seeks to bring about. Similar to outputs and impacts, outcomes are measurable and specific. They typically speak to change that is reasonable to expect within the program or intervention’s scope. Depending on the program, you might see both long-term and short-term outcomes defined. We typically see outcomes expressed in the future tense. For example, “students will experience an increased interest in pursuing STEM careers.” If everything goes according to plan, this is the change that the program expects.

You might start thinking about outcomes in the program design stage. Perhaps you’re interested in seeing more students in your programs pursue STEM careers (as in the example above). To make this happen, you’ve done some research on how informal STEM learning programs can help students pursue STEM careers. You’ve found specific areas, shown to increase students’ enrollment in STEM degree programs and entry into STEM careers, that you’ll want to focus on. For the sake of this example, you’ve chosen to focus on (1) student self-efficacy (the belief in one’s own abilities), (2) student sense of belonging, and (3) student exposure to STEM careers. All three are real factors that contribute to students pursuing STEM degrees and careers, and the first two are particularly important for students who have been historically underrepresented in STEM. Each of them is a potential outcome.

Since you know that increasing students’ exposure to STEM careers and building their sense of belonging and self-efficacy in STEM will contribute to them choosing to pursue STEM degrees and jobs, you’ll design your program around these elements to ensure you’re doing all you can to effect change.

You’ll want to state these outcomes in program planning tools such as theories of change or logic models, and tie them to the program activities, outputs, and impacts you ultimately wish to see.

Figure 1: BridgeYear Logic Model

Outcomes x Evaluation = A Perfect Pairing

Now that you know more about how we might define outcomes and how we use them in program design, let’s talk about how we use evaluation to understand the extent to which we are achieving them.

We first engage with outcomes during the project design process to understand what the program hopes to achieve, why it is designed the way it is, and how we might assess how well it is delivering on its goals or promises. Depending on the goals of the evaluation, we’ll draft evaluation questions pertaining to the outcomes to help guide the process.

Let’s use our example outcome from earlier (“students will experience an increased interest in pursuing STEM careers”) to craft an evaluation question, something like: “To what extent, if at all, are students experiencing an increased interest in pursuing STEM careers after taking part in this program?”

From here, an evaluator would think strategically about the types of data we might collect to answer that question, as well as what is feasible for the project audience and context. For example, you wouldn’t want to give 1st-grade students a 30-question scaled survey. This stage of the process often requires some background research on the construct being measured and on appropriate tools or instruments, along with an eye to cultural responsiveness and feasibility for the project timeline, budget, and context.

For our example, let’s say we’ve landed on two really common methods: (1) a pre/post-program survey using some validated survey measures and (2) focus groups. We’ve chosen appropriate methods and spent a ton of time identifying the right questions to ask. Once we’ve collected and analyzed our data, we can try to answer our evaluation question.

If we used numerical, scale-based questions in our survey, we might find that students’ average interest in pursuing a STEM career increased from the pre-survey to the post-survey. That would be great news! It would suggest that something about the program may be contributing to students’ greater interest in STEM careers. Of course, we cannot know everything about our participants’ experiences outside of our programming, so it’s important to acknowledge that many of the findings we uncover are likely correlations, not causation. That being said, it’s still great news.
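To make the pre/post comparison concrete, here is a minimal Python sketch that computes the average interest score before and after the program, plus the average change, for students who completed both surveys. It assumes (hypothetically) that each student’s interest is recorded as a single numeric score, for example on a 1 to 5 scale, keyed by an anonymous student ID; the function name `pre_post_change` and the sample scores are illustrative, not real data. As noted above, an increase here shows an association with the program, not proof that the program caused it.

```python
from statistics import mean


def pre_post_change(pre: dict, post: dict) -> dict:
    """Compare average interest scores for students who answered both surveys.

    `pre` and `post` map a (hypothetical) anonymous student ID to that
    student's interest score, e.g. on a 1 to 5 scale.
    """
    # Keep only students with both a pre and a post response (a paired comparison).
    matched = sorted(set(pre) & set(post))
    if not matched:
        raise ValueError("no students answered both surveys")
    return {
        "n": len(matched),
        "pre_mean": mean(pre[s] for s in matched),
        "post_mean": mean(post[s] for s in matched),
        # Average of each student's own change (post minus pre).
        "mean_change": mean(post[s] - pre[s] for s in matched),
    }


# Illustrative, made-up scores; student "s4" skipped the post-survey and is excluded.
pre = {"s1": 2, "s2": 3, "s3": 3, "s4": 4}
post = {"s1": 4, "s2": 3, "s3": 4}
print(pre_post_change(pre, post))
```

Matching students before averaging keeps the comparison fair: if students who dropped out before the post-survey differ from those who stayed, comparing unmatched averages could show a change that no individual student actually experienced.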

Now we might turn to the focus group data for some qualitative insights. Oh! Here we find that students are saying they are indeed more interested in STEM careers as a direct result of the program. They cite the many guest speakers, hands-on activities, and career shadowing days as the reasons their interest increased. One student mentioned how important it was to see STEM professionals who look like them, highlighting the importance of inviting diverse partners into the program.

Putting the data together, we can answer our initial question pretty clearly: this program is indeed increasing students’ interest in pursuing STEM careers. We can also show how levels of interest differ among individual students or between groups (e.g., by race or gender), and speak to the individual and collective experiences of the students in the program.
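Showing differences between groups can be as simple as computing the same average change separately for each group. The sketch below assumes (hypothetically) that each survey record carries a self-reported group label alongside the pre and post scores; the record layout, the `change_by_group` name, and the values are illustrative only. In practice, very small groups deserve caution: an average from a handful of students is unstable and can risk identifying individuals.

```python
from collections import defaultdict
from statistics import mean


def change_by_group(records: list) -> dict:
    """Average post-minus-pre change in interest, computed separately per group.

    Each record is assumed (hypothetically) to look like
    {"group": <label>, "pre": <score>, "post": <score or None>}.
    """
    changes = defaultdict(list)
    for r in records:
        # Skip students missing either survey, as in a paired comparison.
        if r.get("pre") is None or r.get("post") is None:
            continue
        changes[r["group"]].append(r["post"] - r["pre"])
    # Report group size alongside the average so small groups stand out.
    return {
        group: {"n": len(vals), "mean_change": mean(vals)}
        for group, vals in sorted(changes.items())
    }


# Illustrative, made-up records with generic group labels.
records = [
    {"group": "A", "pre": 2, "post": 4},
    {"group": "A", "pre": 3, "post": 4},
    {"group": "B", "pre": 3, "post": 3},
    {"group": "B", "pre": 2, "post": None},
]
for group, summary in change_by_group(records).items():
    print(group, summary)
```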

By starting with a well-defined outcome, incorporating that outcome into our program design and evaluation processes, and measuring it in appropriate and responsive ways, we can uncover exciting truths about our work. As we always say: evaluation can offer valuable, data-driven insights that help informal STEM learning organizations improve their work and amplify their impacts.

As you can see, there’s a lot we can do in informal STEM learning to investigate the impacts of our work. Follow along by signing up for our newsletter to learn more.